What is a Computer?

Nowadays, the machine which is

sitting in front of you.

The machine which can draw graphics, set up your modem,

decipher your PGP, do typography, refresh your screen, monitor

your keyboard, manage the performance of all these in

synchrony... and do all of these through a single principle:

reading programs placed in its storage.

But the meaning of the word has changed over time. In the

1930s and 1940s "a computer" meant a person

doing calculations, and to indicate a machine

doing calculations you would say "automatic computer". In the

1960s people still talked about the digital

computer as opposed to the analog

computer.

As in my book, I'm going to use the word "computer" to

indicate only the type of machine which has swept everything

else away in its path: the computer in front of you, the

digital computer with "internally stored modifiable program."

That means I don't count the abacus or Pascal's adding

machine as computers, important as they may be in the history

of thought and technology. I'd call them "calculators."

I wouldn't even call Charles Babbage's 1840s Analytical

Engine the design for a computer. It didn't incorporate the

vital idea which is now exploited by the computer in the

modern sense, the idea of storing programs in the same form as

data and intermediate working. His machine was designed to

store programs on cards, while the working was to be done by

mechanical cogs and wheels.

There were other differences — he did not have electronics

or even electricity, and he still thought in base-10

arithmetic. But more fundamental is the rigid separation of

instructions and data in Babbage's

thought. |

Charles Babbage, 1791-1871


| A hundred years later, the analysis of logical operations,

started by George Boole, was much more advanced.

Electromagnetic relays could be used instead of gearwheels.

But no-one had advanced on Babbage's principle. Builders of

large calculators might put the program on a roll of punched

paper rather than cards, but the idea was the same: machinery

to do arithmetic, and instructions coded in some other form,

somewhere else, designed to make the machinery work.

To see how different this is from a computer, think of what

happens when you want a new piece of software. You can FTP it

from a remote source, and it is transmitted by the same means

as email or any other form of data. You may apply an UnStuffIt

or GZip program to it when it arrives, and this means

operating on the program you have ordered.

For filing, encoding, transmitting, copying, a program is no

different from any other kind of data — it is just a sequence

of electronic on-or-off states which lives on hard disk or in RAM

along with everything else.
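To make this concrete, here is a minimal Python sketch of a program being handled purely as data before it is ever run. The tiny `double` program is a hypothetical stand-in, not anything from the period, and Python's standard `gzip` module plays the part of the GZip program mentioned above.

```python
import gzip

# A tiny program, held as ordinary text (hypothetical example for illustration).
program_source = "def double(n):\n    return 2 * n\n"

# Treat the program as plain bytes and compress it, as one might before sending it.
as_bytes = program_source.encode("utf-8")
compressed = gzip.compress(as_bytes)

# On arrival, decompress it; at this stage it is still just a sequence of bytes.
restored = gzip.decompress(compressed)

# Only now is the data treated as a program: load it and run it.
namespace = {}
exec(restored.decode("utf-8"), namespace)
result = namespace["double"](21)
```

Nothing in the compression step knows or cares that the bytes happen to be instructions; that indifference is the point.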

The people who built big electromechanical calculators in

the 1930s and 1940s didn't think of anything like this.

Even when they turned to electronics, they still thought of

programs as something quite different from numbers, and stored

them in quite a different, inflexible, way. So the ENIAC,

started in 1943, was a massive electronic calculating machine,

but I would not call it a computer in the modern sense.

Perhaps we could call it a near-computer.

|

More on near-computers, war and peace

The Colossus was

also started in 1943 at Bletchley Park, heart of the British

attack on German ciphers (see this

Scrapbook page.)

I wouldn't call it a computer either, though some people

do: it was a machine specifically for breaking the "Fish"

ciphers, although by 1945 the programming had become very

sophisticated and flexible.

But the Colossus was crucial in showing Alan Turing the

speed and reliability of electronics. It was also ahead of

American technology, which only had the comparable ENIAC

calculator fully working in 1946, by which time its design was

completely obsolete. (And the Colossus played a part in

defeating Nazi Germany by reading Hitler's messages, whilst

the ENIAC did nothing in the war effort.)

1996 saw the fiftieth anniversary of the ENIAC. The

University of Pennsylvania and the Smithsonian

made a great deal of it as the "birth of the Information Age".

Vice-President Gore and other dignitaries were involved. Good

for them.

At Bletchley Park Museum, the Reconstruction

of the Colossus had to come from the curator Tony Sale's

individual efforts.

Americans and Brits do things differently. Some things

haven't changed in fifty years.

Another parallel figure, building near-computers, was Konrad

Zuse. Quite independently he designed mechanical

and electromechanical calculators, built in Germany before

and during the war. He didn't use electronics. He still had a

program on a paper tape. But he did see the importance of

programming and can be credited with the first programming

language, Plankalkül.

Konrad Zuse, 1910-1995 |

|

Like Turing, Zuse was an isolated innovator. But while

Turing was taken by the British government into the heart of

the Allied war effort, the German government declined Zuse's

offer to help with code-breaking machines.

The parallel between Turing and Zuse is explored by Thomas

Goldstrasz and Henrik Pantle.

Their work is influenced by the question: was the computer

the offspring of war? They conclude that the war hindered Zuse

and in no way helped.

In contrast, there can be no question that Alan Turing's

war experience was what made it possible for him to turn his

logical ideas into practical electronic machinery. This is a

great irony of history which forms the central part of his

story. He was the most civilian of people, an Anti-War

protester of 1933, very different in character from von

Neumann, who relished association with American military

power.

|

The Internally Stored Modifiable Program

The

breakthrough came through two sources in 1945:

- Alan Turing, with his own logical theory, and his

knowledge of the Colossus.

- the EDVAC report, by John von Neumann, but gathering a

great deal from ENIAC engineers Eckert and Mauchly.

They both saw that the programs should be stored in

just the same way as data. Simple, in retrospect, but not at

all obvious at the time.
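The idea can be sketched with a toy machine in Python. The three-opcode instruction set below is invented purely for illustration and is an assumption of this sketch; it is not the EDVAC or ACE design. Instructions and data sit in one list of integers, so the program can overwrite one of its own instructions as easily as it updates a number.

```python
HALT, ADD, COPY = 0, 1, 2  # hypothetical opcodes for this toy machine


def run(memory, max_steps=1000):
    """Run a toy stored-program machine; instructions and data share `memory`."""
    pc = 0  # program counter: address of the current instruction
    for _ in range(max_steps):
        op, a, b = memory[pc], memory[pc + 1], memory[pc + 2]
        if op == HALT:
            return memory
        if op == ADD:       # memory[b] = memory[b] + memory[a]
            memory[b] += memory[a]
        elif op == COPY:    # memory[b] = memory[a]
            memory[b] = memory[a]
        else:
            raise ValueError(f"unknown opcode {op} at address {pc}")
        pc += 3
    raise RuntimeError("step limit reached")


memory = [
    ADD, 12, 13,   # address 0: add the value at 12 into the cell at 13
    COPY, 14, 6,   # address 3: overwrite the opcode at address 6 with the value at 14
    ADD, 12, 13,   # address 6: a second addition, turned into HALT before it runs
    HALT, 0, 0,    # address 9: never reached
    5, 10, HALT,   # addresses 12-14: data cells
]

final = run(list(memory))
```

After the run, the cell at address 13 holds 15 rather than 20, because the second addition was rewritten into a halt by the program itself. A machine with its program on cards or paper tape has no equivalent operation.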

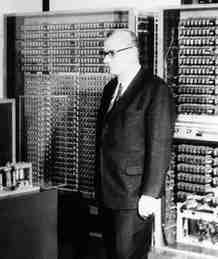

John von Neumann, 1903-1957

John von Neumann

(originally Hungarian) was a major twentieth-century

mathematician with work in many fields unrelated to

computers.


The EDVAC Report became well known and well publicised, and

is usually counted as the origin of the computer in the modern

sense. It was dated 30 June 1945 — before Turing's report was

written. It also bore von Neumann's name alone, denying proper

credit to Eckert and Mauchly who had already seen the

feasibility of storing instructions internally in mercury

delay lines. (This dispute has been brought again to public

attention in the book ENIAC

by Scott McCartney. This strongly contests the viewpoint put

by Herman Goldstine, von Neumann's mathematical colleague, in

The Computer from Pascal to von Neumann.)

However, what Alan Turing wrote in the autumn of 1945 was

independent of the EDVAC proposal, and it was much further

ahead.

That's because he based his ideas on what he had seen in

1936 — the concept of the universal machine. In the abstract

universal machine of 1936 the programs were written on the

store in just the same way as the data and the working. This

was no coincidence. Turing's discoveries in mathematical

logic, using the Turing machine concept, depended on seeing

that programs operating on numbers could

themselves be represented as numbers.

But Turing's 1945 conception of the computer was not tied

to numbers at all. It was for the logical manipulation of

symbols of any kind. From the start he stressed that a

universal machine could switch at a moment's notice from

arithmetic to the algebra of group theory, to chess playing,

or to data processing.

Von Neumann was in the business of calculating for the

atomic bomb and for artillery tables, concerned with doing

massive amounts of arithmetic. Alan Turing came fresh from

codebreaking, work on symbols which wasn't necessarily to do

with arithmetic. He had seen a vast establishment built up

with special machines organised to do different tasks. Now,

Turing saw, they could all be replaced by programs for a

single machine. Further, he saw immediately the first ideas of

programming structure and languages.

|

Computer History on the Web

The Computer Museum History

Center has an extensive overview of computer history.

There are several other pages with huge lists of links to

other pages of computer history.

But you should watch out — a

great deal of published material is wrong or highly

misleading. An example would be the Turing

entry on Professor Lee's site, which also appears as a

resource in the Yahoo listing. This writer fails to

distinguish the Universal Turing machine from the general

Turing machine concept; he believes the Colossus was used by

Turing on the Enigma and was 'essentially a bunch of

servomotors and metal'...

Some typical on-line summaries

- Tools

for Thought, an on-line book by Howard Rheingold, gives

an integrated view of all these developments, very readable,

though with inaccuracies (saying the Colossus was used on

the Enigma).

- The IEEE

Computer Society timeline of computer history,

completely excluding mention of British developments and of

Alan Turing.

- An encyclopaedia

history with the standard American line: the link from

Babbage to the ENIAC, the transition to stored program made

by the EDVAC. No mention of Alan Turing, but it does mention

the Colossus. It points out that since the Colossus was kept

secret it had no influence — which, in America, was indeed

the case.

- Short

lectures by Michelle Hoyle, University of Regina.

Includes a greater variety of topics, including a

discussion of the Turing machine concept, but doesn't

integrate it into the historical development.

- This

'timeline' gives another example of the completely

muddled names and dates so often to be found in summaries of

computer history.

Behind these confusions lies a basic disagreement about

whether the origin of the computer should be placed in a

list of physical objects (basically the

hardware engineers' viewpoint) or whether it belongs to

the history of logical, mathematical and scientific

ideas, as logicians, mathematicians and software

engineers would see it.

I take the second viewpoint: the essential point of the

stored-program computer is that it is built to embody and

implement a logical idea, Turing's idea: the Universal Turing

machine of 1936. Turing himself always referred to computers

(in the modern sense) as 'Practical Universal Computing

Machines'. |

So who invented the computer?

There are many different

views on which aspects of the modern computer are the most

central or critical.

- Some people think that it's the idea of using

electronics for calculating — in which case another American

pioneer, Atanasoff,

should be credited.

- Other people say it's getting a computer actually built

and working. In that case it's either the tiny prototype at

Manchester (1948), on the next

Scrapbook Page, or the EDSAC at

Cambridge, England (1949), that deserves greatest attention.

But I would say that in 1945 Alan Turing alone grasped

everything that was to change computing completely after that

date: the universality of his design, the emphasis on

programming, the exploitation of the stored program, the

importance of non-numerical applications, the apparently

open-ended scope for mechanising intelligence. He did not do

so as an isolated dreamer, but as someone who knew about the

practicability of large-scale electronics, with hands-on

experience.

The idea of one machine for every kind of task was

very foreign to the world of 1945. Even ten years later, in

1956, the big chief of the electromagnetic relay calculator at

Harvard, Howard Aiken, could write:

If it should turn out that the basic logics

of a machine designed for the numerical solution of

differential equations coincide with the logics of a machine

intended to make bills for a department store, I would

regard this as the most amazing coincidence that I have ever

encountered.

But that is exactly how it has turned out. It

is amazing, although we now have come to take

it for granted. But it's not a mere coincidence. It follows

from the deep principle that Alan Turing saw in 1936: the

Universal Turing Machine. |

The Electronic Brain - what went wrong?

So why isn't

Alan Turing famous as the inventor of the computer?

History is on the side of the winner. In 1945 Alan Turing

could have felt like a winner. He was taken on by the National Physical Laboratory

at Teddington, in the London suburbs. His detailed plan for an

electronic computer, with a visionary prospectus for its

capacities, was accepted in March 1946. Everything seemed to

be going for it.

Well, not quite everything. Turing's plan called for at

least 6k bytes of storage, in modern terms, and this was

considered far too ambitious.

And the Colossus electronic engineers, now returned to the

Post Office, were unable to make a quick start on the plan as

he had expected.

In late 1946 the NPL put out press releases which made it

perfectly clear that Turing's design was seen as a major

national project and outstanding innovation.


Some of the feats that

will be able to be performed by Britain's new

electronic brain, which is being developed at the

N.P.L., Teddington, were described to the SURREY COMET

yesterday by Dr. A. M. Turing, 34-year-old mathematics

expert, who is the pioneer of the scheme in this

country.

The machine is to be an improvement on the American

ENIAC, and it was in the brain of Dr Turing that the

more efficient model was developed....

From the local suburban newspaper, the Surrey Comet,

9 November 1946.

More text in my

book. | |

| But it was not to be. The rigid style of management meant

that nothing was built in 1947 or 1948 and virtually all his

ideas from this period, including the beginnings of a

programming language, were lost when he resigned from the NPL

in 1948. Another factor was that the complete secrecy about

the codebreaking operations meant that Turing could never draw

on his immense and successful experience, instead appearing as

a purely theoretical university mathematician.

Furthermore, he did not promote his ideas effectively. If

he had written papers on The Theory and Practice of Computing

in 1947, instead of going on to new ideas, he would have done

more for his reputation.

He didn't. He went running and he thought about what he saw

as the next step: Artificial Intelligence. Or rather, it was

for him not so much the next step, as the very thing which

made him interested in computers at all. The very idea of the

Turing machine in 1936 drew upon modelling the action of the

mind.

|

The Runner Up

Alan Turing got very

depressed and angry about the NPL, and losing a race

against time. But he eased his frustration by becoming a

world class distance runner.

Go to this

Scrapbook Page to see the marathon

man. |

After 1948 almost everyone forgot that he had drawn up the

design for a computer in 1945-6, the most detailed design then

in existence. The mathematician M. H. A. (Max) Newman, when

writing his Biographical Memoir of Turing in 1955, passed over

this period with the words,

...many circumstances combined to turn his

interest to the new automatic computing machines. They were

in principle realizations of [Turing's] 'universal

machine'... though their designers did not yet know of

Turing's work.

How could Newman have

forgotten that Turing himself was such a designer — in fact

the author of the very first detailed design — and that

obviously he knew of his own work? Alan Turing's reputation

has been subject to a strange kind of selective memory. Now,

the computer itself can help put matters right before your

eyes.

A page of detailed electronic design from

Turing's ACE report

|

Newman entirely neglected Turing's

origination of the computer, and this has done considerable

harm to Turing's subsequent reputation in computer science.

But his neglect reflects a pure mathematician's attitude which

perhaps holds a deeper truth. Newman's Biographical Memoir

lamented the fact that Turing was taken away from the

mathematics he was doing in 1938-39, his deepest work. He saw

the computer as a comparatively trivial offshoot, and a

diversion from what Turing should have done. Perhaps Turing

would have done something much greater had he followed the

Ordinal Logics work of 1938. Perhaps the war degraded his true

individual genius and left him doing things that others could

have done better. This is a point of view I now think I did

not give sufficient weight to in my biography of

Turing. It is better reflected in my new text on Turing as

a philosopher.

After Turing resigned, a change in management at the NPL

meant that the ACE project could go ahead after all. A working

computer, based on his original design, was operating in 1950.

The 'Pilot

ACE' is now listed as one of the treasures of the Science Museum,

London. You can see it on the main ground

floor gallery of the Museum.

Alan Turing and the Internet

Alan Turing proposed in

his 1946 report that it would be possible for a remote user to

operate the ACE computer over a telephone link. So he foresaw

the combination of computing and telecommunications long

before others. He was never able to do anything about this,

but one of Turing's early colleagues at the NPL, Donald

W. Davies, went on to pioneer the principle of packet

switching and so the development of the ARPANET which led

to the Internet you are using now. |

| Meanwhile Alan Turing gave up on the NPL's slow procedures

and moved to Manchester in 1948.

The argument about who was first with the idea of the

computer continues there... |

| |